General

Principal Software Engineer @ Just Eat Takeaway. iOS Infrastructure Engineer. Based in London.

How to Implement a Decentralised CLI Tool Manager

- CLI manager

- tool

- executable

- manager

- swift

- cli

A design to implement a simple, generic and decentralised manager for CLI tools from the perspective of a Swift dev.

SPONSORED: Based on this article, I've published Luca! A lightweight decentralised tool manager for macOS to manage project-specific tool environments. 👶 Check it out: luca.tools

Overview

It's common for iOS teams to rely on various CLI tools such as SwiftLint, Tuist, and Fastlane. These tools are often installed in different ways. The most common way is to use Homebrew, which is known to lack version pinning and, as Pedro puts it:

> Homebrew is not able to install and activate multiple versions of the same tool

I also fundamentally dislike the tap system for installing dependencies from third-party repositories. Although I don't have concrete data, I feel that most development teams profoundly dislike Homebrew when used beyond the simple installation of individual tools from the command line, and the brew taps system is cumbersome and bizarre enough to often discourage developers from using it.

Alternatives to manage sets of CLI tools that got traction in the past couple of years are Mint and Mise. As Pedro again says in his article about Mise:

> The first and most core feature of Mise is the ability to install and activate dev tools. Note that we say "activate" because, unlike Homebrew, Mise differentiates between installing a tool and making a specific version of it available.

While beyond the scope of this article, I recommend a great article about installing Swift executables from source with Mise by Natan Rolnik.

In this article I describe a CLI tool manager very similar to what I've implemented for my team. I'll simply call it "ToolManager". The tool is designed to:

- Support installing any external CLI tool distributed in zip archives
- Support activating specific versions per project
- Be decentralised (requiring no registry)

I believe the decentralisation is an interesting aspect and makes the tool reusable in any development environment.
Also, unlike Mise and Mint, ToolManager doesn't build from source and instead relies on pre-built executables.

In the age of GenAI, it's more important than ever to develop critical thinking and learn how to solve problems. For this reason, I won't show the implementation of ToolManager, as it's more important to understand how it's meant to work. The code you'll see in this article supports the overarching design, not the nitty-gritty details of how ToolManager's commands are implemented. If, by the end of the article, you understand how the system should work and are interested in implementing it (perhaps using GenAI), you should be able to convert the design to code fairly easily and, hopefully, without losing the joy of coding.

I myself am considering implementing ToolManager as an open source project later, as I believe it might be very helpful to many teams, just as its incarnation was (and continues to be) for the platform team at JET. There doesn't seem to be an existing tool with the design described in this article.

A different title could have reasonably placed this article in "The easiest X" "series" (1, 2, 3, 4), if I may say so.

Design

The point here is to learn what implementing a tool manager entails. I'll therefore describe the MVP of ToolManager, leaving out details that would make the design too straightforward to implement.

The tool itself is a CLI and it's reasonably implemented in Swift using ArgumentParser, as most modern Swift CLI tools are.
In its simplest form, ToolManager exposes 3 commands:

- install:
  - downloads and installs the tools defined in a spec file (Toolfile.yml) at ~/.toolManager/tools, optionally validating the checksum
  - creates symlinks to the installed versions at $(PWD)/.toolManager/active
- uninstall:
  - clears the entire or partial content of ~/.toolManager/tools
  - clears the content of $(PWD)/.toolManager/active
- version: returns the version of the tool

The install command allows specifying the location of the spec file using the --spec flag, which defaults to Toolfile.yml in the current directory.

The installation of ToolManager should be done in the most raw way, i.e. via a remote script. It'd be quite laughable to rely on Brew, wouldn't it? This practice is commonly used by a variety of tools, for example originally by Tuist (before the introduction of Mise) and... you guessed it... by Brew. Further below is a basic script to achieve this, which you could host on something like AWS S3 with the desired public permissions.

The installation command would be:

    curl -Ls 'https://my-bucket.s3.eu-west-1.amazonaws.com/install_toolmanager.sh' | bash

The version of ToolManager must be defined in the .toolmanager-version file in order for the installation script of the repo to work:

    echo "1.2.0" > .toolmanager-version

ToolManager manages versions of CLI tools but it's not in the business of managing its own versions. Back in the day, Tuist used to use tuistenv to solve this problem. I simply avoid it and have a single version of ToolManager available at /usr/local/bin/ that the installation script overrides with the version defined for the project. The version command is used by the script to decide if a download is needed. There will be only one version of ToolManager in the system at a given time, and that's absolutely OK.

At this point, it's time to show an example of an installation script:

    #!/bin/bash
    set -euo pipefail

    # Fail fast if essential commands are missing.
    command -v curl >/dev/null || { echo "curl not found, please install it."; exit 1; }
    command -v unzip >/dev/null || { echo "unzip not found, please install it."; exit 1; }

    readonly EXEC_NAME="ToolManager"
    readonly INSTALL_DIR="/usr/local/bin"
    readonly EXEC_PATH="$INSTALL_DIR/$EXEC_NAME"
    readonly HOOK_DIR="$HOME/.toolManager"

    # Read the pinned version; tolerate a missing file so the check below reports it.
    REQUIRED_VERSION="$(cat .toolmanager-version 2>/dev/null || true)"
    readonly REQUIRED_VERSION

    # Exit if the version file is missing or empty.
    if [[ -z "$REQUIRED_VERSION" ]]; then
      echo "Error: .toolmanager-version not found or is empty." >&2
      exit 1
    fi

    # Exit if the tool is already installed and up to date.
    if [[ -f "$EXEC_PATH" ]] && [[ "$("$EXEC_PATH" version)" == "$REQUIRED_VERSION" ]]; then
      echo "$EXEC_NAME version $REQUIRED_VERSION is already installed."
      exit 0
    fi

    # Determine OS and the corresponding zip filename.
    case "$(uname -s)" in
      Darwin) ZIP_FILENAME="$EXEC_NAME-macOS.zip" ;;
      Linux)  ZIP_FILENAME="$EXEC_NAME-Linux.zip" ;;
      *)      echo "Unsupported OS: $(uname -s)" >&2; exit 1 ;;
    esac

    # Download and install in a temporary directory.
    TMP_DIR=$(mktemp -d)
    trap 'rm -rf "$TMP_DIR"' EXIT # Ensure cleanup on script exit.

    echo "Downloading $EXEC_NAME ($REQUIRED_VERSION)..."
    DOWNLOAD_URL="https://github.com/MyOrg/$EXEC_NAME/releases/download/$REQUIRED_VERSION/$ZIP_FILENAME"
    curl -LSsf --output "$TMP_DIR/$ZIP_FILENAME" "$DOWNLOAD_URL"
    unzip -o -qq "$TMP_DIR/$ZIP_FILENAME" -d "$TMP_DIR"

    # Use sudo only when the install directory is not writable.
    SUDO_CMD=""
    if [[ ! -w "$INSTALL_DIR" ]]; then
      SUDO_CMD="sudo"
    fi

    echo "Installing $EXEC_NAME to $INSTALL_DIR..."
    $SUDO_CMD mkdir -p "$INSTALL_DIR"
    $SUDO_CMD mv "$TMP_DIR/$EXEC_NAME" "$EXEC_PATH"
    $SUDO_CMD chmod +x "$EXEC_PATH"

    # Download and source the shell hook to complete installation.
    echo "Installing shell hook..."
    mkdir -p "$HOOK_DIR"
    curl -LSsf --output "$HOOK_DIR/shell_hook.sh" "https://my-bucket.s3.eu-west-1.amazonaws.com/shell_hook.sh"

    # shellcheck source=/dev/null
    source "$HOOK_DIR/shell_hook.sh"

    echo "Installation complete."
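The install command is described as optionally validating a checksum, and the same idea could be applied to the archive that the installation script downloads. The following is only a minimal sketch of that check, not part of the original script: the function names `sha256_of` and `verify_checksum` are hypothetical, and it assumes the expected SHA-256 digest is made available to the script somehow (for example, committed next to .toolmanager-version).

```shell
#!/bin/bash
# Sketch: verify a downloaded archive against an expected SHA-256 digest.
# Function names are hypothetical; they are not part of ToolManager.

# Print the SHA-256 digest of a file, using whichever tool is available
# (sha256sum is common on Linux, shasum ships with macOS).
sha256_of() {
  local file="$1"
  if command -v sha256sum >/dev/null 2>&1; then
    sha256sum "$file" | awk '{print $1}'
  else
    shasum -a 256 "$file" | awk '{print $1}'
  fi
}

# Return 0 if the file matches the expected digest, 1 otherwise.
verify_checksum() {
  local file="$1" expected="$2" actual
  actual="$(sha256_of "$file")"
  if [[ "$actual" != "$expected" ]]; then
    echo "Checksum mismatch for $file: expected $expected, got $actual" >&2
    return 1
  fi
}
```

In an installer like the one above, `verify_checksum "$TMP_DIR/$ZIP_FILENAME" "$EXPECTED_SHA256"` could run right after the download and before `unzip`, where `EXPECTED_SHA256` is an assumed variable. With `set -e` active, a mismatch would stop the script before anything is installed.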
You might have noticed that, in the installation script:

- the required version of ToolManager (defined in .toolmanager-version) is downloaded from the corresponding GitHub release if missing locally. The ToolManager repo should have a GHA workflow in place to build, archive and upload the version.
- a shell_hook script is downloaded and run to insert the following line in the shell profile:

      [[ -s "$HOME/.toolManager/shell_hook.sh" ]] && source "$HOME/.toolManager/shell_hook.sh"

  This allows switching location in the terminal and loading the active tools for the current project.

Showing an example of shell_hook.sh is in order:

    #!/bin/bash
    # Overrides 'cd' to update PATH when entering a directory with a local tool setup.

    # Add the project-specific bin directory to PATH if it exists
    # and is not already on PATH (avoids growing PATH on every 'cd').
    update_tool_path() {
      local tool_bin_dir="$PWD/.toolManager/active"
      if [[ -d "$tool_bin_dir" && ":$PATH:" != *":$tool_bin_dir:"* ]]; then
        export PATH="$tool_bin_dir:$PATH"
      fi
    }

    # Redefine 'cd' to trigger the path update after changing directories.
    cd() {
      builtin cd "$@" || return
      update_tool_path
    }

    # --- Installation Logic ---
    # The following function only runs when this script is sourced by an installer.
    install_hook() {
      local rc_file
      case "${SHELL##*/}" in
        bash) rc_file="$HOME/.bashrc" ;;
        zsh)  rc_file="$HOME/.zshrc" ;;
        *)
          echo "Unsupported shell for hook installation: $SHELL" >&2
          return 1
          ;;
      esac

      # The line to add to the shell's startup file.
      local hook_line="[[ -s \"$HOME/.toolManager/shell_hook.sh\" ]] && source \"$HOME/.toolManager/shell_hook.sh\""

      # Add the hook if it's not already present.
      if ! grep -Fxq "$hook_line" "$rc_file" &>/dev/null; then
        printf "\n%s\n" "$hook_line" >> "$rc_file"
        echo "Shell hook installed in $rc_file. Restart your shell to apply changes."
      fi
    }

    # This check ensures 'install_hook' only runs when sourced, not when executed.
    if [[ "${BASH_SOURCE[0]}" != "$0" ]]; then
      install_hook
    fi

Now that we have a working installation of ToolManager, let's define our Toolfile.yml in our project folder:

    ---
    tools:
      - name: PackageGenerator
        binaryPath: PackageGenerator
        version: 3.3.0
        zipUrl: https://github.com/justeattakeaway/PackageGenerator/releases/download/3.3.0/PackageGenerator-macOS.zip
      - name: SwiftLint
        binaryPath: swiftlint
        version: 0.57.0
        zipUrl: https://github.com/realm/SwiftLint/releases/download/0.57.0/portable_swiftlint.zip
      - name: ToggleGen
        binaryPath: ToggleGen
        version: 1.0.0
        zipUrl: https://github.com/TogglesPlatform/ToggleGen/releases/download/1.0.0/ToggleGen-macOS-universal-binary.zip
      - name: Tuist
        binaryPath: tuist
        version: 4.48.0
        zipUrl: https://github.com/tuist/tuist/releases/download/4.48.0/tuist.zip
      - name: Sourcery
        binaryPath: bin/sourcery
        version: 2.2.5
        zipUrl: https://github.com/krzysztofzablocki/Sourcery/releases/download/2.2.5/sourcery-2.2.5.zip

The install command of ToolManager loads the Toolfile at the root of the repo and, for each defined dependency, performs the following:

- checks if the version of the dependency already exists on the machine
- if it doesn't exist, downloads it, unzips it, and places the binary at ~/.toolManager/tools/ (e.g. ~/.toolManager/tools/PackageGenerator/3.3.0/PackageGenerator)
- creates a symlink to the binary in the project directory from .toolManager/active (e.g. .toolManager/active/PackageGenerator)

After running ToolManager install (or ToolManager install --spec=Toolfile.yml), ToolManager should produce the following structure:

    ~ tree ~/.toolManager/tools -L 2
    ├── PackageGenerator
    │   └── 3.3.0
    ├── Sourcery
    │   └── 2.2.5
    ├── SwiftLint
    │   └── 0.57.0
    ├── ToggleGen
    │   └── 1.0.0
    └── Tuist
        └── 4.48.0

and from the project folder:

    ls -la .toolManager/active
    <redacted> PackageGenerator -> /Users/alberto/.toolManager/tools/PackageGenerator/3.3.0/PackageGenerator
    <redacted> Sourcery -> /Users/alberto/.toolManager/tools/Sourcery/2.2.5/Sourcery
    <redacted> SwiftLint -> /Users/alberto/.toolManager/tools/SwiftLint/0.57.0/SwiftLint
    <redacted> ToggleGen -> /Users/alberto/.toolManager/tools/ToggleGen/1.0.0/ToggleGen
    <redacted> Tuist -> /Users/alberto/.toolManager/tools/Tuist/4.48.0/Tuist

Bumping the versions of some tools in the Toolfile, for example SwiftLint to 0.58.2 and Tuist to 4.54.3 (updating both the version and the zipUrl), and re-running the install command should result in the following:

    ~ tree ~/.toolManager/tools -L 2
    ├── PackageGenerator
    │   └── 3.3.0
    ├── Sourcery
    │   └── 2.2.5
    ├── SwiftLint
    │   ├── 0.57.0
    │   └── 0.58.2
    ├── ToggleGen
    │   └── 1.0.0
    └── Tuist
        ├── 4.48.0
        └── 4.54.3

    ls -la .toolManager/active
    <redacted> PackageGenerator -> /Users/alberto/.toolManager/tools/PackageGenerator/3.3.0/PackageGenerator
    <redacted> Sourcery -> /Users/alberto/.toolManager/tools/Sourcery/2.2.5/Sourcery
    <redacted> SwiftLint -> /Users/alberto/.toolManager/tools/SwiftLint/0.58.2/SwiftLint
    <redacted> ToggleGen -> /Users/alberto/.toolManager/tools/ToggleGen/1.0.0/ToggleGen
    <redacted> Tuist -> /Users/alberto/.toolManager/tools/Tuist/4.54.3/Tuist

CI Setup

On CI, the setup is quite simple.
It involves 2 steps:

- install ToolManager
- install the tools

The commands can be wrapped in GitHub composite actions:

    name: Install ToolManager

    runs:
      using: composite
      steps:
        - name: Install ToolManager
          shell: bash
          run: curl -Ls 'https://my-bucket.s3.eu-west-1.amazonaws.com/install_toolmanager.sh' | bash

and

    name: Install tools

    inputs:
      spec:
        description: The name of the ToolManager spec file
        required: false
        default: Toolfile.yml

    runs:
      using: composite
      steps:
        - name: Install tools
          shell: bash
          run: |
            ToolManager install --spec=${{ inputs.spec }}
            echo "$PWD/.toolManager/active" >> "$GITHUB_PATH"

which are then simply used in workflows:

    - name: Install ToolManager
      uses: ./.github/actions/install-toolmanager

    - name: Install tools
      uses: ./.github/actions/install-tools
      with:
        spec: Toolfile.yml

CLI tools conformance

ToolManager can install tools that are made available in zip files, without requiring them to implement any particular spec. Depending on the CLI tool, the executable can be at the root of the zip archive or in a subfolder. Sourcery, for example, places the executable in the bin folder:

    - name: Sourcery
      binaryPath: bin/sourcery
      version: 2.2.5
      zipUrl: https://github.com/krzysztofzablocki/Sourcery/releases/download/2.2.5/sourcery-2.2.5.zip

GitHub releases are great for hosting releases as zip files and that's all we need. Ideally, one should decorate the repositories with appropriate release workflows. Following is a simple example that builds a macOS binary. It could be extended to also create a Linux binary.
    name: Publish Release

    on:
      push:
        tags:
          - '*'

    env:
      CLI_NAME: my-awesome-cli-tool

    permissions:
      contents: write

    jobs:
      build-and-archive:
        name: Build and Archive macOS Binary
        runs-on: macos-latest
        steps:
          - name: Checkout repository
            uses: actions/checkout@v4

          - name: Setup Xcode
            uses: maxim-lobanov/setup-xcode@v1
            with:
              xcode-version: '16.4'

          - name: Build universal binary
            run: swift build -c release --arch arm64 --arch x86_64

          - name: Archive the binary
            run: |
              cd .build/apple/Products/Release/
              zip -r "${{ env.CLI_NAME }}-macOS.zip" "${{ env.CLI_NAME }}"

          - name: Upload artifact for release
            uses: actions/upload-artifact@v4
            with:
              name: cli-artifact
              path: .build/apple/Products/Release/${{ env.CLI_NAME }}-macOS.zip

      create-release:
        name: Create GitHub Release
        needs: [build-and-archive]
        runs-on: ubuntu-latest
        steps:
          - name: Download CLI artifact
            uses: actions/download-artifact@v4
            with:
              name: cli-artifact

          - name: Create Release and Upload Asset
            uses: softprops/action-gh-release@v2
            with:
              files: "${{ env.CLI_NAME }}-macOS.zip"

A note on version pinning

Dependency management systems tend to use a lock file (like Package.resolved in Swift Package Manager, Podfile.lock in the old days of CocoaPods, yarn.lock/package-lock.json in JavaScript, etc.).

The benefits of using a lock file are mainly 2:

- Reproducibility: it locks the exact versions (including transitive dependencies) so that every team member, CI server, or production environment installs the same versions.
- Faster installs: dependency managers can skip version resolution if a lock file is present, using it directly to fetch the exact versions, improving speed.

We can remove the need for lock files if we pin the versions in the spec (the file defining the tools). If version range operators like CocoaPods' optimistic operator ~>, SPM's .upToNextMajor and similar ones didn't exist, lock files would lose their utility.
While useful, lock files are generally annoying and can create that odd feeling of seeing unexpected updates in pull requests made by others. ToolManager doesn't use a lock file; instead, it requires teams to pin their tools' versions, which I strongly believe is a good practice.

This approach comes at the cost of teams having to keep an eye out for patch releases rather than leaving updates to the machine. Automatic updates, on the other hand, risk pulling in dependencies that don't respect Semantic Versioning (SemVer).

Support for different architectures

This design makes it possible to support different architectures. Some CI workflows might only need a Linux runner to reduce the burden on precious macOS instances. Both macOS and Linux can be supported with individual Toolfiles that can be specified when running the install command.

    # on macOS
    ToolManager install --spec=Toolfile_macOS

    # on Linux
    ToolManager install --spec=Toolfile_Linux

Conclusion

The design described in this article powers the solution implemented at JET and has served our teams successfully since October 2023. JET has always preferred to implement in-house solutions where possible and sensible, and I can say that moving away from Homebrew was a blessing.

With this design, the work usually done by a package manager and a central spec repository is shifted to individual components that are only required to publish releases in zip archives, ideally via a release workflow.

By decentralising and requiring version pinning, we made ToolManager a simple yet powerful system for managing the installation of CLI tools.

How to setup a Swift Package Registry in Artifactory

- swift

- registry

- artifactory

- package

A quick guide to setting up a Swift Package Registry with Artifactory to speed up builds and streamline dependency management.

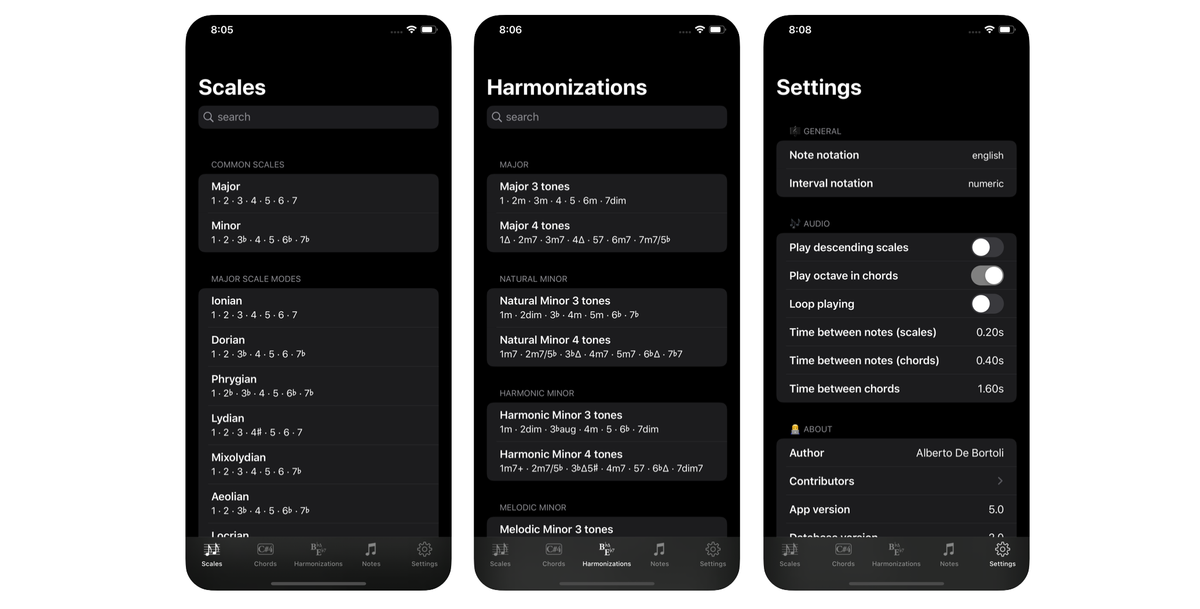

Introduction

It's very difficult to have GenAI not hallucinate when it comes to Swift Package Registry. No surprise there: the feature is definitely niche, has not been widely adopted and there's a lack of examples online. As Dave put it, Swift Package Registries had an even rockier start compared to SPM.

I've recently implemented a Swift Package Registry on Artifactory for my team and I thought of summarising my experience here since it's still fresh in my head. While some details are left out, the happy path should be covered. I hope with this article to help you all indirectly by providing more material to the LLM overlords.

Problem

The main problem that led us to look into Swift Package Registry is SPM deep-cloning entire Git repositories for each dependency, which became time-consuming. Our CI jobs took a few minutes just to pull all the Swift packages. For dependencies with very large repositories, such as SendbirdUIKit (which is more than 2GB), one could rely on pre-compiled XCFrameworks as a workaround. Airbnb provides a workaround via the SPM-specific repo for Lottie.

A Swift Registry allows serving dependencies as zip artifacts containing only the required revision, avoiding the deep clone of the Git repositories.

What is a Swift Package Registry?

A Swift Package Registry is a server that stores and vends Swift packages by implementing SE-0292 and the corresponding specification. Instead of relying on Git repositories to source our dependencies, we can use a registry to download them as versioned archives (zip files).

See: swift-package-manager/Documentation/PackageRegistry/PackageRegistryUsage.md at main, in swiftlang/swift-package-manager on GitHub.

The primary advantages of using a Swift Package Registry are:

- Reduced CI/CD Pipeline Times: by fetching lightweight zip archives from the registry rather than cloning the entire repositories from GitHub.
- Improved Developer Machine Performance: the same time savings on CI are reflected on the developers' machines during dependency resolution.
- Availability: by hosting a registry, teams are no longer dependent on the availability of external source control systems like GitHub, but rather on internal ones (for example, self-hosted Artifactory).
- Security: injecting vulnerabilities in popular open-source projects is known as a supply chain attack and has become increasingly common in recent years. A registry makes it possible to adopt a process to trust the sources published on it.

Platforms

Apple has accepted the Swift Registry specification and implemented support to interact with registries within SPM but has left the implementation of actual registries to third-party platforms. Apple is not in the business of providing a Swift Registry.

The main platform having adopted Swift Registries is Artifactory (see "Artifactory, Your Swift Package Repository", JFrog), although AWS CodeArtifact, Cloudsmith and Tuist provide support too:

- "New – Add Your Swift Packages to AWS CodeArtifact" (Amazon Web Services, Sébastien Stormacq)
- "Private, secure, hosted Swift registry" (Cloudsmith)
- "Announcing Tuist Registry" (Tuist, Marek Fořt)

The benefits are usually appealing to teams with large apps, hence it's reasonable to believe that only big companies have looked into adopting a registry successfully.

Artifactory Setup

Let's assume a JFrog Artifactory to host our Swift Package Registry exists at https://packages.acme.com. Artifactory supports local, remote, and virtual repositories, but a realistic setup consists of only local and virtual repositories. (Image source: Artifactory.)

Local Repositories are meant to be used for publishing dependencies from CI pipelines. Virtual Repositories are instead meant to be used for resolving (pulling) dependencies on both CI and the developers' machines. Remote repositories are not really relevant in a typical Swift Registry setup.

Following the documentation at https://jfrog.com/help/r/jfrog-artifactory-documentation/set-up-a-swift-registry, let's create 2 repositories with the following names:

- local repository: swift-local
- virtual repository: swift-virtual

Local Setup

To pull dependencies from the Swift Package Registry, we need to configure the local environment.

1. Set the Registry URL

First, we need to inform SPM about the existence of the registry. We can do this on a per-project basis or globally for the user account.

From a package's root directory, run the following command. This will create a .swiftpm/configuration/registries.json file within your project folder.

    swift package-registry set "https://packages.acme.com/artifactory/api/swift/swift-virtual"

The resulting registries.json file will look like this:

    {
      "authentication": {},
      "registries": {
        "[default]": {
          "supportsAvailability": false,
          "url": "https://packages.acme.com/artifactory/api/swift/swift-virtual"
        }
      },
      "version": 1
    }

To set the registry for all your projects, use the --global flag.
    swift package-registry set --global "https://packages.acme.com/artifactory/api/swift/swift-virtual"

This will create the configuration file at ~/.swiftpm/configuration/registries.json.

Xcode projects don't support project-level registries nor (in my experience) support scopes other than the default one (i.e. avoid using the --scope flag).

2. Authentication

To pull packages, authenticating with Artifactory is usually required. It's plausible, though, that your company allows all artifacts from Artifactory to be read without authentication as long as one is connected to the company VPN.

In cases where authentication is required, SPM uses a .netrc file in the home directory to find credentials for remote servers. This file is a standard way to handle login information for various network protocols.

Using a token generated from the Artifactory dashboard, the line to add to the .netrc file would be:

    machine packages.acme.com login <your_artifactory_username> password <your_artifactory_token>

Alternatively, it's possible to log in using the swift package-registry login command. This command securely stores your token in the system's keychain.

    swift package-registry login "https://packages.acme.com/artifactory/api/swift/swift-virtual" \
      --token <token>

    # or

    swift package-registry login "https://packages.acme.com/artifactory/api/swift/swift-virtual" \
      --username <username> \
      --password <token_treated_as_password>

CI/CD Setup

On CI, the setup is slightly different as the goals are:

- to resolve dependencies in CI/CD jobs
- to publish new package versions in CD jobs for both internal and external dependencies

The steps described for the local setup are valid for the resolution on CI too. The interesting part here is how publishing is done. I will assume the usage of GitHub Actions.

1. Retrieving the Artifactory Token

The JFrog CLI can be used via the setup-jfrog-cli action to authenticate using the most appropriate method.
You might want to wrap the action in a custom composite one exporting the token as the output of a step:

    TOKEN=$(jf config export)
    echo "::add-mask::$TOKEN"
    echo "artifactory-token=$TOKEN" >> "$GITHUB_OUTPUT"

2. Logging into the Registry

The CI job must log in to the local repository (swift-local) to gain push permissions. The token retrieved in the previous step is used for this purpose.

    swift package-registry login \
      "https://packages.acme.com/artifactory/api/swift/swift-local" \
      --token ${{ steps.get-token.outputs.artifactory-token }}

3. Publishing Packages

Swift Registry requires archives created with the swift package archive-source command from the dependency folder. E.g.

    swift package archive-source -o "Alamofire-5.10.1.zip"

We could avoid creating the archive and instead download it directly from GitHub releases.

    curl -L -o Alamofire-5.10.1.zip \
      https://github.com/Alamofire/Alamofire/archive/refs/tags/5.10.1.zip

Uploading the archive can then be done by using the JFrog CLI, which needs to be configured via the setup-jfrog-cli action. If going down this route, the upload command would be:

    jf rt upload Alamofire-5.10.1.zip \
      https://packages.acme.com/artifactory/api/swift/swift-local/acme/Alamofire/Alamofire-5.10.1.zip

There is a specific structure to respect:

    <REPOSITORY>/<SCOPE>/<NAME>/<NAME>-<VERSION>.zip

which is the last part of the above URL:

    swift-local/acme/Alamofire/Alamofire-5.10.1.zip

Unfortunately, using the steps above causes a downstream problem, with SPM not being able to resolve the dependencies in the registry. I tried extensively and couldn't find the reason why SPM wouldn't be happy with how the packages were published. I might have missed something, but eventually I had to switch to the publish command.

The swift package-registry publish command doesn't present this issue, hence it's the solution adopted in this workflow.
    swift package-registry publish acme.Alamofire 5.10.1 \
      --url https://packages.acme.com/artifactory/api/swift/swift-local \
      --scratch-directory "$(mktemp -d)"

To verify that the upload and indexing succeeded, check that the uploaded *.zip artifact is available and that the .swift folder exists (an indication that the indexing has occurred). If the specific structure is not respected, the .swift folder won't be generated.

Consuming Packages from the Registry

Packages

The easiest and only documented way to consume a package from a registry is via a Package. In the Package.swift file, use the .package(id:from:) syntax to declare a registry-based dependency. The id is a combination of the scope and the package name.

    ...
        dependencies: [
            .package(id: "acme.Alamofire", from: "5.10.1"),
        ],
        targets: [
            .target(
                name: "MyApp",
                dependencies: [
                    .product(name: "Alamofire", package: "acme.Alamofire"),
                ]
            ),
            ...
        ]
    )

Run swift package resolve or simply build the Package in Xcode to pull the dependencies.

You might bump into transitive dependencies (i.e. dependencies listed in the Package.swift files of the packages published on the registry) pointing to GitHub. In this case, it'd be great to instruct SPM to use the corresponding versions on the registry. The --replace-scm-with-registry flag is designed to work for the entire dependency graph, including transitive dependencies.

The cornerstone of associating a registry-hosted package with its GitHub origin is the package-metadata.json file. This file provides essential metadata about the packages at the time of publishing (the --metadata-path flag of the publish command defaults to package-metadata.json). Crucially, it includes a field to specify the source control repository URLs. When swift package resolve --replace-scm-with-registry is executed, SPM queries the configured registry.
The registry then uses the information from the package-metadata.json to map the package identity to its corresponding GitHub URL, enabling a smooth and transparent resolution process.

The metadata file must conform to the JSON schema defined in SE-0391. It is recommended to include all URL variations (e.g., SSH, HTTPS) for the same repository. E.g.

    {
      "repositoryURLs": [
        "https://github.com/Alamofire/Alamofire",
        "https://github.com/Alamofire/Alamofire.git",
        "git@github.com:Alamofire/Alamofire.git"
      ]
    }

Printing the dependencies should confirm the source of the dependencies:

    swift package show-dependencies --replace-scm-with-registry

When loading a package with Xcode, the flag can be enabled via an environment variable in the scheme:

    IDEPackageDependencySCMToRegistryTransformation=useRegistryIdentityAndSources

Unfortunately, for packages, the schemes won't load until SPM completes the resolution, so running the following from the terminal addresses the issue:

    defaults write com.apple.dt.Xcode IDEPackageDependencySCMToRegistryTransformation useRegistryIdentityAndSources

which can be unset with:

    defaults delete com.apple.dt.Xcode IDEPackageDependencySCMToRegistryTransformation

Xcode

It's likely that you'll want to use the registry from Xcode projects for direct dependencies. If using the Tuist registry, it seems you would be able to leverage a Package Collection to add dependencies from the registry from the Xcode UI.

Note that until Xcode 26 Beta 1, it's not possible to add registry dependencies directly in the Xcode UI, but if you use Tuist to generate your project (as you should), you can use Package.registry (introduced with https://github.com/tuist/tuist/pull/7225). E.g.

    let project = Project(
        ...
        packages: [
            .registry(
                identifier: "acme.Alamofire",
                requirement: .exact(Version(stringLiteral: "5.10.1"))
            )
        ],
        ...
    )

If not using Tuist, you'd have to rely on setting IDEPackageDependencySCMToRegistryTransformation either as an environment variable in the scheme or globally via the terminal.

You can also use xcodebuild to resolve dependencies using the correct flag:

    xcodebuild \
      -resolvePackageDependencies \
      -packageDependencySCMToRegistryTransformation useRegistryIdentityAndSources

Conclusions

We've found that using an in-house Swift registry drastically reduces dependency resolution time and size on disk by downloading only the required revision instead of the entire, potentially large, Git repository. This improvement benefits both CI pipelines and developers' local environments. Additionally, registries help mitigate the risk of supply chain attacks.

As of this writing, Swift registries are not widely adopted, which is reflected in the limited number of platforms that support them. They also show various bugs that I myself bumped into when using particular configurations (source: https://forums.swift.org/t/package-registry-support-in-xcode/73626/19).

It's unclear whether adoption will grow and uncertain if Apple will ever address the issues reported by the community, but when a functioning setup is put in place, registries offer an efficient and secure alternative to using XCFrameworks in production builds and reduce both disk and time footprints.

Scalable Continuous Integration for iOS

- CI

- mobile

- iOS

- AWS

- macOS

How Just Eat Takeaway.com leverage AWS, Packer, Terraform and GitHub Actions to manage a CI stack of macOS runners.

Originally published on the Just Eat Takeaway Engineering Blog.

Problem

At Just Eat Takeaway.com (JET), our journey through continuous integration (CI) reflects a landscape of innovation and adaptation. Historically, JET’s multiple iOS teams operated independently, each employing their distinct CI solutions. The original Just Eat iOS and Android teams had pioneered an in-house CI solution anchored in Jenkins. This setup, detailed in our 2021 article, served as the backbone of our CI practices up until 2020. It was during this period that the iOS team initiated a pivotal migration: moving from in-house Mac Pros and Mac Minis to AWS EC2 macOS instances.

Fast forward to 2023, a significant transition occurred within our Continuous Delivery Engineering (CDE) Platform Engineering team. The decision to adopt GitHub Actions company-wide has marked the end of our reliance on Jenkins while other teams are in the process of migrating away from solutions such as CircleCI and GitLab CI. This transition represented a fundamental shift in our CI philosophy. By moving away from Jenkins, we eliminated the need to maintain an instance for the Jenkins server and the complexities of managing how agents connected to it. Our focus then shifted to transforming our Jenkins pipelines into GitHub Actions workflows.

This transformation extended beyond mere tool adoption. Our primary goal was to ensure that our macOS instances were not only scalable but also configured in code. We therefore enhanced our global CI practices and set standards across the entire company.

Desired state of CI

As we embarked on our journey to refine and elevate our CI process, we envisioned a state-of-the-art CI system. Our goals were ambitious yet clear, focusing on scalability, automation, and efficiency.
At the time of implementing the system, no other player in the industry seemed to have implemented the complete solution we envisioned. Below is a summary of our desired CI state:

- Instance setup in code: One primary objective was to enable the definition of the setup of the instances entirely in code. This includes specifying macOS version, Xcode version, Ruby version, and other crucial configurations. For this purpose, the HashiCorp tool Packer emerged once again as an ideal solution, offering the flexibility and precision we required.
- IaC (Infrastructure as Code) for macOS instances: To define the infrastructure of our fleet of macOS instances, we leaned towards Terraform, another HashiCorp tool. Terraform provided us with the capability to not only deploy but also to scale and migrate our infrastructure seamlessly, crucially maintaining its state.
- Auto and Manual Scaling: We wanted the ability to dynamically create CI runners based on demand, ensuring that resources were optimally utilized and available when needed. To optimize resource utilization, especially during off-peak hours, we desired an autoscaling feature. Scaling down our CI runners on weekends, when developer activity is minimal, was critical to being cost-effective.
- Automated Connection to GitHub Actions: We aimed for the instances to automatically connect to GitHub Actions as runners upon deployment. This automation was crucial in eliminating manual interventions via SSH or VNC.
- Multi-Team Use: Our vision included CI runners that could be easily used by multiple teams across different time zones. This would not only maximize the utility of our infrastructure but also encourage reuse and standardization.
- Centralized Management via GitHub Actions: To further streamline our CI processes, we intended to run all tasks through GitHub Actions workflows. This approach would allow the teams to self-serve and alleviate the need for developers to use Docker or maintain local environments.
Getting to the desired state was a journey that presented multiple challenges and constant adjustments to make sure we could migrate smoothly to a new system.

Instance setup in code

We implemented the desired configuration with Packer leveraging a number of Shell Provisioners and variables to configure the instance. Here are some of the configuration steps:

- Set user password (to allow remote desktop access)
- Resize the partition to use all the space available on the EBS volume
- Start the Apple Remote Desktop agent and enable remote desktop access
- Update Brew & Install Brew packages
- Install CloudWatch agent
- Install rbenv/Ruby/bundler
- Install Xcode versions
- Install GitHub Actions actions-runner
- Copy scripts to connect to GitHub Actions as a runner
- Copy daemon to start the GitHub Actions self-hosted runner as a service
- Set macos-init modules to perform provisioning of the first launch

While the steps above are naturally configuration steps to perform when creating the AMI, the macos-init modules include steps to perform once the instance becomes available.

The create_ami workflow accepts inputs that are eventually passed to Packer to generate the AMI.

    packer build \
      --var ami_name_prefix=${{ env.AMI_NAME_PREFIX }} \
      --var region=${{ env.REGION }} \
      --var subnet_id=${{ env.SUBNET_ID }} \
      --var vpc_id=${{ env.VPC_ID }} \
      --var root_volume_size_gb=${{ env.ROOT_VOLUME_SIZE_GB }} \
      --var macos_version=${{ inputs.macos-version }} \
      --var ruby_version=${{ inputs.ruby-version }} \
      --var xcode_versions='${{ steps.parse-xcode-versions.outputs.list }}' \
      --var gha_version=${{ inputs.gha-version }} \
      bare-metal-runner.pkr.hcl

Different teams often use different versions of software, like Xcode. To accommodate this, we permit multiple versions to be installed on the same instance. The choice of which version to use is then determined within the GitHub Actions workflows.

The seamless generation of AMIs has proven to be a significant enabler.
For example, when Xcode 15.1 was released, we executed this workflow the same evening. In just over two hours, we had an AMI ready to deploy all the runners (it usually takes 70–100 minutes for a macOS AMI with 400GB of EBS volume to become ready after creation). This efficiency enabled our teams to use the new Xcode version just a few hours after its release.

IaC (Infrastructure as Code) for macOS instances

Initially, we used distinct Terraform modules for each instance to facilitate the deployment and decommissioning of each one. Given the high cost of EC2 Mac instances, we managed this process with caution, carefully balancing host usage while also being mindful of the 24-hour minimum allocation time.

We ultimately ended up using Terraform to define a single infrastructure (i.e. a single Terraform module) defining resources such as:

- aws_key_pair, aws_instance, aws_ami
- aws_security_group, aws_security_group_rule
- aws_secretsmanager_secret
- aws_vpc, aws_subnet
- aws_cloudwatch_metric_alarm
- aws_sns_topic, aws_sns_topic_subscription
- aws_iam_role, aws_iam_policy, aws_iam_role_policy_attachment, aws_iam_instance_profile

A crucial part was to use count in aws_instance, setting it to the value of a variable passed in from the deploy_infra workflow. Terraform performs the necessary scaling upon changing the value.

We have implemented a workflow to perform Terraform apply and destroy commands for the infrastructure. Only the AMI and the number of instances are required as inputs.

    terraform ${{ inputs.command }} \
      --var ami_name=${{ inputs.ami-name }} \
      --var fleet_size=${{ inputs.fleet-size }} \
      --auto-approve

Using the name of the AMI instead of the ID allows us to use the most recent one that was generated, which is useful in case of name clashes.

    variable "ami_name" {
      type = string
    }

    variable "fleet_size" {
      type = number
    }

    data "aws_ami" "bare_metal_gha_runner" {
      most_recent = true

      filter {
        name   = "name"
        values = ["${var.ami_name}"]
      }
      ...
    }

    resource "aws_instance" "bare_metal" {
      count         = var.fleet_size
      ami           = data.aws_ami.bare_metal_gha_runner.id
      instance_type = "mac2.metal"
      tenancy       = "host"
      key_name      = aws_key_pair.bare_metal.key_name
      ...
    }

Instead of maintaining multiple CI instances with varying software configurations, we concluded that it’s simpler and more efficient to have a single, standardised setup. While teams still have the option to create and deploy their unique setups, a smaller, unified system allows for easier support by a single global configuration.

Auto and Manual Scaling

The deploy_infra workflow allows us to scale on demand but it doesn’t release the underlying dedicated hosts, which are the resources that are ultimately billed.

The autoscaling solution provided by AWS is great for VMs but gets considerably more complex when applied to dedicated hosts. Auto Scaling groups on macOS instances would require a Custom Managed License, a Host Resource Group and, of course, a Launch Template. Using only AWS services appears to be a lot of work to pull things together and the result wouldn’t allow for automatic release of the dedicated hosts.

Airbnb mentions in their Flexible Continuous Integration for iOS article that an internal scaling service was implemented:

"An internal scaling service manages the desired capacity of each environment’s Auto Scaling group."

Some articles explain how to set up Auto Scaling groups for mac instances (see 1 and 2) but after careful consideration, we agreed that implementing a simple scaling service via GitHub Actions (GHA) was the easiest and most maintainable solution.
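To give a feel for how little such a scaling step needs to do, here is a minimal sketch in shell. It only assembles the terraform invocation shown earlier for a requested fleet size. The function name `scale_command` and the input validation are assumptions made for illustration; they are not taken from our actual workflows.

```shell
#!/bin/sh
# Hypothetical helper (not from the original workflows): prints the terraform
# command a scaling job would run for a given AMI name and fleet size.
scale_command() {
  sc_ami_name="$1"
  sc_fleet_size="$2"

  # Reject anything that is not a non-negative integer.
  case "$sc_fleet_size" in
    ''|*[!0-9]*)
      echo "fleet size must be a non-negative integer, got '$sc_fleet_size'" >&2
      return 1
      ;;
  esac

  # Same flags as the deploy_infra step described above.
  printf 'terraform apply --var ami_name=%s --var fleet_size=%s --auto-approve\n' \
    "$sc_ami_name" "$sc_fleet_size"
}
```

A scheduled job would pass a different fleet size depending on when it runs and let Terraform work out which instances to add or remove from the change in `count`. Printing the command instead of running it keeps the sketch safe to try locally.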
We implemented 2 GHA workflows to fully automate the weekend autoscaling:

- Upscaling workflow to n, triggered at a specific time at the beginning of the working week
- Downscaling workflow to 1, triggered at a specific time at the beginning of the weekend

We retain the capability for manual scaling, which is essential for situations where we need to scale down, such as on bank holidays, or scale up, like on release cut days, when activity typically exceeds the usual levels.

Additionally, we have implemented a workflow that runs multiple times a day and tries to release all available hosts without an instance attached. This step relieves us of the burden of having to remember to release the hosts. Dedicated hosts take up to 110 minutes to move from the Pending to the Available state due to the scrubbing workflow performed by AWS.

Manual scaling can happen between the scheduled autoscaling runs, so the autoscaling workflows must be resilient to unexpected states of the infrastructure, which thankfully Terraform takes care of.

Both down and upscaling are covered in the following flowchart:

[Figure: flowchart of the downscaling and upscaling workflows]

The autoscaling values are defined as configuration variables in the repo settings:

[Figure: configuration variables in the repo settings]

It usually takes ~8 minutes for an EC2 mac2.metal instance to become reachable after creation, meaning that we can redeploy the entire infrastructure very quickly.

Automated Connection to GitHub Actions

We provide some user data when deploying the instances.

    resource "aws_instance" "bare_metal" {
      ami   = data.aws_ami.bare_metal_gha_runner.id
      count = var.fleet_size
      ...
      user_data = <<EOF
    {
      "github_enterprise": "<GHE_ENTERPRISE_NAME>",
      "github_pat_secret_manager_arn": "${data.aws_secretsmanager_secret_version.ghe_pat.arn}",
      "github_url": "<GHE_ENTERPRISE_URL>",
      "runner_group": "CI-MobileTeams",
      "runner_name": "bare-metal-runner-${count.index + 1}"
    }
    EOF
    }

The user data is stored in a specific folder by macos-init and we implement a module to copy the content to ~/actions-runner-config.json.
    ### Group 10 ###
    [[Module]]
      Name = "Create actions-runner-config.json from userdata"
      PriorityGroup = 10
      RunPerInstance = true
      FatalOnError = false
      [Module.Command]
        Cmd = ["/bin/zsh", "-c", 'instanceId="$(curl http://169.254.169.254/latest/meta-data/instance-id)"; if [[ ! -z $instanceId ]]; then cp /usr/local/aws/ec2-macos-init/instances/$instanceId/userdata ~/actions-runner-config.json; fi']
        RunAsUser = "ec2-user"

The file is in turn used by the configure_runner.sh script to configure the GitHub Actions runner.

    #!/bin/bash

    CONFIG="$HOME/actions-runner-config.json"

    # Read the values provided via user data
    GITHUB_ENTERPRISE=$(jq -r .github_enterprise "$CONFIG")
    GITHUB_PAT_SECRET_MANAGER_ARN=$(jq -r .github_pat_secret_manager_arn "$CONFIG")
    GITHUB_PAT=$(aws secretsmanager get-secret-value --secret-id "$GITHUB_PAT_SECRET_MANAGER_ARN" | jq -r .SecretString)
    GITHUB_URL=$(jq -r .github_url "$CONFIG")
    RUNNER_GROUP=$(jq -r .runner_group "$CONFIG")
    RUNNER_NAME=$(jq -r .runner_name "$CONFIG")

    # Request a registration token for the enterprise runners
    RUNNER_JOIN_TOKEN=$(curl -L \
      -X POST \
      -H "Accept: application/vnd.github+json" \
      -H "Authorization: Bearer $GITHUB_PAT" \
      "$GITHUB_URL/api/v3/enterprises/$GITHUB_ENTERPRISE/actions/runners/registration-token" | jq -r '.token')

    MACOS_VERSION=$(sw_vers -productVersion)

    # Comma-separated list of the installed Xcode-* apps, without the .app suffix
    XCODE_VERSIONS=$(find /Applications -maxdepth 1 -type d -name "Xcode-*" \
      -exec basename {} \; \
      | tr '\n' ',' \
      | sed 's/,$//' \
      | sed 's/\.app//g')

    "$HOME/actions-runner/config.sh" \
      --unattended \
      --url "$GITHUB_URL/enterprises/$GITHUB_ENTERPRISE" \
      --token "$RUNNER_JOIN_TOKEN" \
      --runnergroup "$RUNNER_GROUP" \
      --labels "ec2,bare-metal,$RUNNER_NAME,macOS-$MACOS_VERSION,$XCODE_VERSIONS" \
      --name "$RUNNER_NAME" \
      --replace

The above script is run by a macos-init module.
    ### Group 11 ###
    [[Module]]
      Name = "Configure the GHA runner"
      PriorityGroup = 11
      RunPerInstance = true
      FatalOnError = false
      [Module.Command]
        Cmd = ["/bin/zsh", "-c", "/Users/ec2-user/configure_runner.sh"]
        RunAsUser = "ec2-user"

The GitHub documentation states that it’s possible to create a customized service starting from a provided template. It took some research and various attempts to find the right configuration that allows the connection without having to log in via the UI (over VNC), which would be a blocker for a complete automation of the deployment. We believe that the only person who managed to get this right is Sébastien Stormacq, who provided the correct solution.

The connection to GHA can be completed with 2 more modules that install the runner as a service and load the custom daemon.

    ### Group 12 ###
    [[Module]]
      Name = "Run the self-hosted runner application as a service"
      PriorityGroup = 12
      RunPerInstance = true
      FatalOnError = false
      [Module.Command]
        Cmd = ["/bin/zsh", "-c", "cd /Users/ec2-user/actions-runner && ./svc.sh install"]
        RunAsUser = "ec2-user"

    ### Group 13 ###
    [[Module]]
      Name = "Launch actions runner daemon"
      PriorityGroup = 13
      RunPerInstance = true
      FatalOnError = false
      [Module.Command]
        Cmd = ["sudo", "/bin/launchctl", "load", "/Library/LaunchDaemons/com.justeattakeaway.actions-runner-service.plist"]
        RunAsUser = "ec2-user"

Using a daemon instead of an agent (see Creating Launch Daemons and Agents) doesn’t require us to set up any auto-login, which on macOS is a bit of a tricky procedure and is best avoided for security reasons too.

The following is the content of the daemon for completeness.
    <?xml version="1.0" encoding="UTF-8"?>
    <!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">
    <plist version="1.0">
      <dict>
        <key>Label</key>
        <string>com.justeattakeaway.actions-runner-service</string>
        <key>ProgramArguments</key>
        <array>
          <string>/Users/ec2-user/actions-runner/runsvc.sh</string>
        </array>
        <key>UserName</key>
        <string>ec2-user</string>
        <key>GroupName</key>
        <string>staff</string>
        <key>WorkingDirectory</key>
        <string>/Users/ec2-user/actions-runner</string>
        <key>RunAtLoad</key>
        <true/>
        <key>StandardOutPath</key>
        <string>/Users/ec2-user/Library/Logs/com.justeattakeaway.actions-runner-service/stdout.log</string>
        <key>StandardErrorPath</key>
        <string>/Users/ec2-user/Library/Logs/com.justeattakeaway.actions-runner-service/stderr.log</string>
        <key>EnvironmentVariables</key>
        <dict>
          <key>ACTIONS_RUNNER_SVC</key>
          <string>1</string>
        </dict>
        <key>ProcessType</key>
        <string>Interactive</string>
        <key>SessionCreate</key>
        <true/>
      </dict>
    </plist>

Not long after the deployment, all the steps above are executed and we can see the runners appearing as connected.

Multi-Team Use

We start the downscaling at 11:59 PM on Fridays and start the upscaling at 6:00 AM on Mondays. These times have been chosen in a way that guarantees a level of service to teams in the UK, the Netherlands (GMT+1) and Canada (Winnipeg is on GMT-6) accounting for BST (British Summer Time) and DST (Daylight Saving Time) too. Times are defined in UTC in the GHA workflow triggers and the local time of the runner is not taken into account.

Since the instances are used to build multiple projects and tools owned by different teams, one problem we faced was that instances could get compromised if workflows included unsafe steps (e.g. modifications to global configurations).
GitHub Actions has a documentation page about Hardening self-hosted runners specifically stating:

"Self-hosted runners for GitHub do not have guarantees around running in ephemeral clean virtual machines, and can be persistently compromised by untrusted code in a workflow."

We try to combat such potential problems by educating people on how to craft workflows and rely on the quick redeployment of the stack should the instances break. We also run scripts before and after each job to ensure that instances can be reused as much as possible. This includes actions like deleting the simulators’ content, derived data, caches and archives.

Centralized Management via GitHub Actions

The macOS runners stack is defined in a dedicated macOS-runners repository. We implemented GHA workflows to cover the use cases that allow teams to self-serve:

- create macOS AMI
- deploy CI
- downscale for the weekend*
- upscale for the working week*
- release unused hosts*

* run without inputs and on a scheduled trigger

The runners running the jobs in this repo are small t2.micro Linux instances and come with the AWS CLI installed. An IAM instance role with the correct policies is used to make sure that the aws ec2 commands allocate-hosts, describe-hosts and release-hosts can execute, and we use jq to parse the API responses.

A note on VM runners

In this article, we discussed how we’ve used bare metal instances as runners. We have spent a considerable amount of time investigating how we could leverage the Virtualization framework provided by Apple to create virtual machines via Tart.

If you’ve grasped the complexity of crafting a CI system of runners on bare metal instances, you can understand that introducing VMs makes the setup considerably more convoluted, which would be best discussed in a separate article.

While a setup with Tart VMs has been implemented, we realised that it’s not performant enough to be put to use.
Using VMs, the number of runners would double but we preferred to have native performance as the slowdown is over 40% compared to bare metal. Moreover, when it comes to running heavy UI test suites like ours, tests became too flaky. Testing the VMs, we also realised that the standard values of Throughput and IOPS on the EBS volume didn’t seem to be enough and caused disk congestion resulting in an unacceptable slowdown in performance.

Here is a quick summary of the setup and the challenges we have faced.

- Virtual runners require 2 images: one for the VMs (tart) and one for the host (AMI).
- We use Packer to create VM images (Vanilla, Base, IDE, Tools) with the software required based on the templates provided by Tart and we store the OCI-compliant images on ECR. We create these images on CI with dedicated workflows similar to the one described earlier for bare metal but, in this case, macOS runners (instead of Linux) are required as publishing to ECR is done with tart, which runs on macOS. Extra policies are required on the instance role to allow the runner to push to ECR (using temporary_iam_instance_profile_policy_document in Packer’s Amazon EBS builder).
- Apple limits the number of VMs that can run on an instance to 2, which would allow us to double the size of the fleet of runners. Creating AMIs hosting 2 VMs is done with Packer and steps include cloning the image from ECR and configuring macos-init modules to run daemons to run the VMs via Tart.
- Deploying a virtual CI infrastructure is identical to what has already been described for bare metal.
- Connecting to and interfacing with the VMs happens from within the host. Opening SSH and especially VNC sessions from within the bare metal instances can be very confusing.
- The version of macOS on the host and the one on the VMs could differ. The version used on the host must be provided with an AMI by AWS, while the version used on the VMs is provided by Apple in IPSW files (see ipsw.me).
- The GHA runners run on the VMs, meaning that the host doesn’t need Xcode or any other software used by the workflows.
- VMs don’t allow for provisioning, meaning we have to share configurations with the VMs via shared folders on the host with the --dir flag, which adds setup complexity.
- VMs can’t easily run the GHA runner as a service. The svc script requires the runner to be configured first, an operation that cannot be done during the provisioning of the host. We therefore need to implement an agent ourselves to configure and connect the runner in a single script.
- To have UI access (à la VNC) to the VMs, it’s first required to stop the VMs and then run them without the --no-graphics flag. At the time of writing, copy-pasting won’t work even if using the --vnc or --vnc-experimental flags.
- Tartelet is a macOS app on top of Tart that allows managing multiple GitHub Actions runners in ephemeral environments on a single host machine. We didn’t consider it, both to avoid relying on too much third-party software and because it doesn’t yet have GitHub Enterprise support.
- Worth noting that the Tart team worked on an orchestration solution named Orchard that seems to be in its initial stage.

Conclusion

In 2023 we have revamped and globalised our approach to CI. We have migrated from Jenkins to GitHub Actions as the CI/CD solution of choice for the whole group and have profoundly optimised and improved our pipelines introducing a greater level of job parallelisation. We have implemented a brand new scalable setup for bare metal macOS runners leveraging the HashiCorp tools Packer and Terraform. We have also implemented a setup based on Tart virtual machines. We have increased the size of our iOS team over the past few years, now including more than 40 developers, and still managed to be successful with only 5 bare metal instances on average, which is a clear statement of how performant and optimised our setup is.
We have extended the capabilities of our Internal Developer Platform with a globalised approach to provide macOS runners; we feel that this setup will stand the test of time and serve well various teams across JET for years to come.

The idea of a Fastlane replacement

Prelude

Fastlane is widely used by iOS teams all around the world. It became the de facto standard to automate common tasks such as building apps, running tests, and uploading builds to App Store Connect. Fastlane has been recently moved under the Mobile Native Foundation, which is amazing as Google wasn't actively maintaining the project. At Just Eat Takeaway, we have implemented an extensive number of custom lanes to perform domain-specific tasks and used them from our CI.

The major problem with Fastlane is that it's written in Ruby. When it was born, using Ruby was a sound choice but iOS developers are not necessarily familiar with the language, which is a barrier to contributing and writing lanes. While Fastlane.swift, a version of Fastlane in Swift, has been in beta for years, it's not a rewrite in Swift but rather a "solution on top", meaning that developers and CI systems still have to rely on Ruby, install related software (rbenv or rvm) and most likely maintain a Gemfile. The average iOS dev knows well that Ruby environments are a pain to deal with and have caused countless headaches.

In recent years, Apple has introduced technologies that would enable a replacement of Fastlane using Swift:

- Swift Package Manager (SPM)
- Swift Argument Parser (SAP)

As a big fan of CLI tools written in Swift, I started maturing the idea of a Fastlane rewrite in Swift in early 2022. I circulated the idea with friends and colleagues for months and the sentiment was clear: it's time for a fresh Fastlane-like tool written in Swift.

Journey

Towards the end of 2022, I was determined to start this project. I teamed up with 2 iOS devs (not working at Just Eat Takeaway) and we started working on a design. I was keen on calling this project "Swiftlane" but the preference seemed to be for the name "Interstellar", which was eventually shortened to "Stellar".
Fastlane has the concept of Actions and I instinctively thought that in Swift-land, they could take the form of SPM packages. This would make Stellar a modular system with pluggable components. For example, consider the Scan action in Fastlane. It could be a package that solely solves the same problem around testing. My goal was not to implement the plethora of existing Fastlane actions but rather to create a system that allows plugging in any package building on macOS.

A sound design of such a system was crucial. The Stellar ecosystem I had in mind was composed of 4 parts:

Actions

Actions are the basic building blocks of the ecosystem. They are packages that define a library product. An action can do anything, from taking care of build tasks to integrating with GitHub. Actions are independent packages that have no knowledge of the Stellar system, which treats them as pluggable components to create higher abstractions. Ideally, actions should expose an executable product (the CLI tool) using SAP calling into the action code. This is not required by Stellar but it’s advisable as a best practice. Official Actions would be hosted in the Stellar organisation on GitHub. Custom Actions could be created using Stellar.

Tasks

Tasks are specific to a project and implemented by the project developers. They are SAP ParsableCommand or AsyncParsableCommand which use actions to construct complex logic specific to the needs of the project.

Executor

Executor is a command line tool in the form of a package generated by Stellar. It’s the entry point to the user-defined tasks. Invoking tasks on the Executor is like invoking lanes in Fastlane. Both developers and CI would interface with the Executor (masked as Stellar) to perform all operations. E.g.
    stellar setup_environment --developer-mode
    stellar run_unit_tests module=OrderHistory
    stellar setup_demo_app module=OrderHistory
    stellar run_ui_tests module=OrderHistory device="iPhone 15 Pro"

Stellar CLI

Stellar CLI is a command line tool that takes care of the heavy lifting of dealing with the Executor and the Tasks. It allows the integration of Stellar in a project and it should expose the following main commands:

- init: initialise the project by creating an Executor package in the .stellar folder
- build: builds the Executor generating a binary that is shared with the team members and used by CI
- create-action: scaffolding to create a new action in the form of a package
- create-task: scaffolding to create a new task in the form of a package
- edit: opens the Executor package for editing, similar to tuist edit

This design was presented to a restricted group of devs at Just Eat Takeaway and it didn't take long to get an agreement on it. It was clear that once Stellar was completed, we would integrate it into the codebase.

Wider design

I believe that a combination of CLI tools can create complex, templateable and customizable stacks to support the creation and growth of iOS codebases. Based on the experience developed at JET working on a large modular project with lots of packages, helper tools and optimised CI pipelines, I wanted Stellar to be eventually part of a set of tools taking the name “Stellar Tools” that could enable the creation and the management of large codebases.
Something like the following:

- Tuist: generates projects and workspaces programmatically
- PackageGenerator: generates packages using a DSL
- Stacker: creates a modular iOS project based on a DSL
- Stellar: automates tasks
- Workflows: generates GitHub Actions workflows that use Stellar

From my old notes:

[Image not included]

Current state

After a few months of development within this team (made of devs not working at Just Eat Takeaway), I realised things were not moving in the direction I desired and I decided it was not beneficial to continue the collaboration with the team. We stopped working on Stellar mainly due to different levels of commitment from each of us and a focus on the wrong tasks, signalling a lack of project management on my end. For example, a considerable amount of time and effort went into the implementation of a version management system (vastly inspired by the one used in Tuist) that was not part of the scope of the Stellar project.

The experience left me bitter and demotivated, learning that sometimes projects are best started alone. We made the repo public on GitHub aware that it was far from being production-ready but, in my opinion, it's without doubt a nice, inspiring MVP.

- GitHub - StellarTools/Stellar
- GitHub - StellarTools/ActionDSL

The intent was then to progress on my own or with my colleagues at JET. As things evolved in 2023, we embarked on big projects that continued to evolve the platform such as a massive migration to GitHub Actions. To this day, we still plan to remove Fastlane as our vision is to rely on external dependencies as little as possible but there is no plan to use Stellar as-is. I suspect that, for the infrastructure team at JET, things will evolve in a way that sees more CLI tools being implemented and more GitHub actions using them.

CloudWatch dashboards and alarms on Mac instances

CloudWatch is great for observing and monitoring resources and applications on AWS, on premises, and on other clouds.

While it's trivial to have the agent running on Linux, it's a bit more involved for mac instances (which are commonly used as CI workers). The support was announced in January 2021 for mac1.metal (Intel/x86_64) and I bumped into some challenges on mac2.metal (M1/ARM64) that the team at AWS helped me solve (see this issue on the GitHub repo). I couldn't find other articles or precise documentation from AWS, which is why I'm writing this article to walk you through a common CloudWatch setup.

The given code samples are for the HashiCorp tools Packer and Terraform and focus on mac2.metal instances.

I'll cover the following steps:

- install the CloudWatch agent on mac2.metal instances
- configure the CloudWatch agent
- create a CloudWatch dashboard
- set up CloudWatch alarms
- set up IAM permissions

Install the CloudWatch agent

The CloudWatch agent can be installed by downloading the pkg file listed on this page and running the installer. You probably want to bake the agent into your AMI, so here is the Packer code for mac2.metal (ARM):

    # Install wget via brew
    provisioner "shell" {
      inline = [
        "source ~/.zshrc",
        "brew install wget"
      ]
    }

    # Install CloudWatch agent
    provisioner "shell" {
      inline = [
        "source ~/.zshrc",
        "wget https://s3.amazonaws.com/amazoncloudwatch-agent/darwin/arm64/latest/amazon-cloudwatch-agent.pkg",
        "sudo installer -pkg ./amazon-cloudwatch-agent.pkg -target /"
      ]
    }

For the agent to work, you'll need collectd (https://collectd.org/) to be installed on the machine, which is usually done via brew. Brew installs it at /opt/homebrew/sbin/. This is also a step you want to perform when creating your AMI.

    # Install collectd via brew
    provisioner "shell" {
      inline = [
        "source ~/.zshrc",
        "brew install collectd"
      ]
    }

Configure the CloudWatch agent

In order to run, the agent needs a configuration which can be created using the wizard.
This page defines the metric sets that are available. Running the wizard with the command below will allow you to generate a basic JSON configuration which you can modify later.

    sudo /opt/aws/amazon-cloudwatch-agent/bin/amazon-cloudwatch-agent-config-wizard

The following is a working configuration for Mac instances so you can skip the process.

    {
      "agent": {
        "metrics_collection_interval": 60,
        "run_as_user": "root"
      },
      "metrics": {
        "aggregation_dimensions": [
          ["InstanceId"]
        ],
        "append_dimensions": {
          "AutoScalingGroupName": "${aws:AutoScalingGroupName}",
          "ImageId": "${aws:ImageId}",
          "InstanceId": "${aws:InstanceId}",
          "InstanceType": "${aws:InstanceType}"
        },
        "metrics_collected": {
          "collectd": {
            "collectd_typesdb": [
              "/opt/homebrew/opt/collectd/share/collectd/types.db"
            ],
            "metrics_aggregation_interval": 60
          },
          "cpu": {
            "measurement": [
              "cpu_usage_idle",
              "cpu_usage_iowait",
              "cpu_usage_user",
              "cpu_usage_system"
            ],
            "metrics_collection_interval": 60,
            "resources": ["*"],
            "totalcpu": false
          },
          "disk": {
            "measurement": [
              "used_percent",
              "inodes_free"
            ],
            "metrics_collection_interval": 60,
            "resources": ["*"]
          },
          "diskio": {
            "measurement": [
              "io_time",
              "write_bytes",
              "read_bytes",
              "writes",
              "reads"
            ],
            "metrics_collection_interval": 60,
            "resources": ["*"]
          },
          "mem": {
            "measurement": ["mem_used_percent"],
            "metrics_collection_interval": 60
          },
          "netstat": {
            "measurement": [
              "tcp_established",
              "tcp_time_wait"
            ],
            "metrics_collection_interval": 60
          },
          "statsd": {
            "metrics_aggregation_interval": 60,
            "metrics_collection_interval": 10,
            "service_address": ":8125"
          },
          "swap": {
            "measurement": ["swap_used_percent"],
            "metrics_collection_interval": 60
          }
        }
      }
    }

I have enhanced the output of the wizard with some reasonable metrics to collect. The configuration created by the wizard almost works, but it lacks one setting that is essential for it to work out of the box: the collectd_typesdb value.
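Before settling on a collectd_typesdb value, it can help to confirm where types.db actually lives on the machine. The following is a minimal sketch rather than part of the original setup: the helper name find_typesdb is hypothetical, and the /usr/local path is an assumption covering Homebrew's default prefix on Intel Macs.

```shell
# Hypothetical helper: print the first existing types.db among the given candidates.
find_typesdb() {
  for candidate in "$@"; do
    if [ -f "$candidate" ]; then
      printf '%s\n' "$candidate"
      return 0
    fi
  done
  return 1
}

# Candidate locations: Homebrew on Apple Silicon (the path used in this article),
# Homebrew on Intel (assumed default prefix), and the Linux default.
find_typesdb \
  /opt/homebrew/opt/collectd/share/collectd/types.db \
  /usr/local/opt/collectd/share/collectd/types.db \
  /usr/share/collectd/types.db \
  || echo "types.db not found; is collectd installed?" >&2
```

Whatever path is printed is the one to put in collectd_typesdb.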
Linux and Mac differ when it comes to the location of collectd and types.db, and the agent defaults to the Linux path even if it was built for Mac, causing the following error when trying to run the agent:

    ======== Error Log ========
    2023-07-23T04:57:28Z E! [telegraf] Error running agent: Error loading config file /opt/aws/amazon-cloudwatch-agent/etc/amazon-cloudwatch-agent.toml: error parsing socket_listener, open /usr/share/collectd/types.db: no such file or directory

Moreover, the /usr/share/ folder is not writable unless you disable SIP (System Integrity Protection), which cannot be done on EC2 mac instances and is not something you want to do anyway for security reasons.

The final configuration is something you want to save in Systems Manager Parameter Store using the ssm_parameter resource in Terraform:

    resource "aws_ssm_parameter" "cw_agent_config_darwin" {
      name        = "/cloudwatch-agent/config/darwin"
      description = "CloudWatch agent config for mac instances"
      type        = "String"
      value       = file("./cw-agent-config-darwin.json")
    }

and use it when running the agent in a provisioning step:

    resource "null_resource" "run_cloudwatch_agent" {
      depends_on = [
        aws_instance.mac_instance
      ]

      connection {
        type        = "ssh"
        agent       = false
        host        = aws_instance.mac_instance.private_ip
        user        = "ec2-user"
        private_key = tls_private_key.mac_instance.private_key_pem
        timeout     = "30m"
      }

      # Run CloudWatch agent
      provisioner "remote-exec" {
        inline = [
          "sudo /opt/aws/amazon-cloudwatch-agent/bin/amazon-cloudwatch-agent-ctl -a fetch-config -m ec2 -s -c ssm:${aws_ssm_parameter.cw_agent_config_darwin.name}"
        ]
      }
    }

Create a CloudWatch dashboard

Once the instances are deployed and running, they will send metrics to CloudWatch and we can create a dashboard to visualise them. You can create a dashboard manually in the console and, once you are happy with it, copy its source, store it in a file and feed it to Terraform.
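As an alternative to copying the source from the console, the dashboard body can also be pulled with the AWS CLI. This is a minimal sketch, assuming the AWS CLI is installed and credentials with cloudwatch:GetDashboard are configured; the function name export_dashboard is hypothetical and not part of the original setup.

```shell
# Hypothetical helper: save the JSON body of an existing dashboard to a file.
#   $1: dashboard name as shown in the CloudWatch console
#   $2: output file path
export_dashboard() {
  aws cloudwatch get-dashboard \
    --dashboard-name "$1" \
    --query DashboardBody \
    --output text > "$2"
}
```

For example, `export_dashboard my-dashboard ./cw-dashboard-mac-instances.json` (with my-dashboard as a placeholder name) would produce a file that Terraform can read with file().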
Here is mine, which could probably work for you too if you tag your instances with Type set to macOS:

    {
      "widgets": [
        {
          "height": 15,
          "width": 24,
          "y": 0,
          "x": 0,
          "type": "explorer",
          "properties": {
            "metrics": [
              {
                "metricName": "cpu_usage_user",
                "resourceType": "AWS::EC2::Instance",
                "stat": "Average"
              },
              {
                "metricName": "cpu_usage_system",
                "resourceType": "AWS::EC2::Instance",
                "stat": "Average"
              },
              {
                "metricName": "disk_used_percent",
                "resourceType": "AWS::EC2::Instance",
                "stat": "Average"
              },
              {
                "metricName": "diskio_read_bytes",
                "resourceType": "AWS::EC2::Instance",
                "stat": "Average"
              },
              {
                "metricName": "diskio_write_bytes",
                "resourceType": "AWS::EC2::Instance",
                "stat": "Average"
              }
            ],
            "aggregateBy": {
              "key": "",
              "func": ""
            },
            "labels": [
              {
                "key": "Type",
                "value": "macOS"
              }
            ],
            "widgetOptions": {
              "legend": {
                "position": "bottom"
              },
              "view": "timeSeries",
              "stacked": false,
              "rowsPerPage": 50,
              "widgetsPerRow": 1
            },
            "period": 60,
            "splitBy": "",
            "region": "eu-west-1"
          }
        }
      ]
    }

You can then use the cloudwatch_dashboard resource in Terraform:

    resource "aws_cloudwatch_dashboard" "mac_instances" {
      dashboard_name = "mac-instances"
      dashboard_body = file("./cw-dashboard-mac-instances.json")
    }

Set up CloudWatch alarms

Once the dashboard is up, you should set up alarms so that you are notified of any anomalies, rather than actively monitoring the dashboard for them. What works for me is having alarms sent via email when the used disk space goes above a certain level (say 80%). We can use the cloudwatch_metric_alarm resource.
    resource "aws_cloudwatch_metric_alarm" "disk_usage" {
      alarm_name          = "mac-${aws_instance.mac_instance.id}-disk-usage"
      comparison_operator = "GreaterThanThreshold"
      evaluation_periods  = 30
      metric_name         = "disk_used_percent"
      namespace           = "CWAgent"
      period              = 120
      statistic           = "Average"
      threshold           = 80
      alarm_actions       = [aws_sns_topic.disk_usage.arn]
      dimensions = {
        InstanceId = aws_instance.mac_instance.id
      }
    }

We can then create an SNS topic and subscribe all interested parties to it. This will allow us to broadcast to all subscribers when the alarm is triggered. For this, we can use the sns_topic and sns_topic_subscription resources.

    resource "aws_sns_topic" "disk_usage" {
      name = "CW_Alarm_disk_usage_mac_${aws_instance.mac_instance.id}"
    }

    resource "aws_sns_topic_subscription" "disk_usage" {
      for_each  = toset(var.alarm_subscriber_emails)
      topic_arn = aws_sns_topic.disk_usage.arn
      protocol  = "email"
      endpoint  = each.value
    }

    variable "alarm_subscriber_emails" {
      type = list(string)
    }

If you are deploying your infrastructure via GitHub Actions, you can set your subscribers as a workflow input or as an environment variable. Here is how you should pass a list of strings via a variable in Terraform:

    name: Deploy Mac instance

    env:
      ALARM_SUBSCRIBERS: '["user1@example.com","user2@example.com"]'
      AMI: ...

    jobs:
      deploy:
        ...
        steps:
          - name: Terraform apply
            run: |
              terraform apply \
                --var ami=${{ env.AMI }} \
                --var alarm_subscriber_emails='${{ env.ALARM_SUBSCRIBERS }}' \
                --auto-approve

Set up IAM permissions

The instance that performs the deployment requires permissions for CloudWatch, Systems Manager, and SNS. The following policy is enough to perform both terraform apply and terraform destroy. Please consider restricting it to specific resources.
    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "CloudWatchDashboardsPermissions",
          "Effect": "Allow",
          "Action": [
            "cloudwatch:DeleteDashboards",
            "cloudwatch:GetDashboard",
            "cloudwatch:ListDashboards",
            "cloudwatch:PutDashboard"
          ],
          "Resource": "*"
        },
        {
          "Sid": "CloudWatchAlertsPermissions",
          "Effect": "Allow",
          "Action": [
            "cloudwatch:DescribeAlarms",
            "cloudwatch:DescribeAlarmsForMetric",
            "cloudwatch:DescribeAlarmHistory",
            "cloudwatch:DeleteAlarms",
            "cloudwatch:DisableAlarmActions",
            "cloudwatch:EnableAlarmActions",
            "cloudwatch:ListTagsForResource",
            "cloudwatch:PutMetricAlarm",
            "cloudwatch:PutCompositeAlarm",
            "cloudwatch:SetAlarmState"
          ],
          "Resource": "*"
        },
        {
          "Sid": "SystemsManagerPermissions",
          "Effect": "Allow",
          "Action": [
            "ssm:GetParameter",
            "ssm:GetParameters",
            "ssm:ListTagsForResource",
            "ssm:DeleteParameter",
            "ssm:DescribeParameters",
            "ssm:PutParameter"
          ],
          "Resource": "*"
        },
        {
          "Sid": "SNSPermissions",
          "Effect": "Allow",
          "Action": [
            "sns:CreateTopic",
            "sns:DeleteTopic",
            "sns:GetTopicAttributes",
            "sns:GetSubscriptionAttributes",
            "sns:ListSubscriptions",
            "sns:ListSubscriptionsByTopic",
            "sns:ListTopics",
            "sns:SetSubscriptionAttributes",
            "sns:SetTopicAttributes",
            "sns:Subscribe",
            "sns:Unsubscribe"
          ],
          "Resource": "*"
        }
      ]
    }

On the other hand, to send metrics to CloudWatch, the Mac instances require the permissions given by the CloudWatchAgentServerPolicy:

    resource "aws_iam_role_policy_attachment" "mac_instance_iam_role_cw_policy_attachment" {
      role       = aws_iam_role.mac_instance_iam_role.name
      policy_arn = "arn:aws:iam::aws:policy/CloudWatchAgentServerPolicy"
    }

Conclusion

You have now defined your CloudWatch dashboard and alarms using "Infrastructure as Code" via Packer and Terraform. I've covered the common use case of instances running out of disk space, which is useful to catch before CI becomes unresponsive and slows your team down.

Easy connection to AWS Mac instances with EC2macConnector

Overview

Amazon Web Services (AWS) provides EC2 Mac instances commonly used as CI workers. Configuring them can be either a manual or an automated process, depending on the DevOps and Platform Engineering experience in your company. No matter what process you adopt, it is sometimes useful to log into the